We Build AI Assistants, Enterprise AI, Government AI, and Generative AI for Your Business

Our innovative technology supports a wide range of industries, offering tailored solutions to meet the unique needs of each client.

Trusted by Industry Leaders

Join the companies that trust our solutions for their digital presence.

Our Products

Make the best models with the best data. Scale Data Engine powers nearly every major foundation model, and with Scale GenAI Platform, leverages your enterprise data to unlock the value of AI.

AI & ML Solutions

Harness the power of artificial intelligence and machine learning to gain insights, automate processes, and create smart applications.

What we offer:

- Predictive analytics models

- Natural language processing

- Recommendation systems

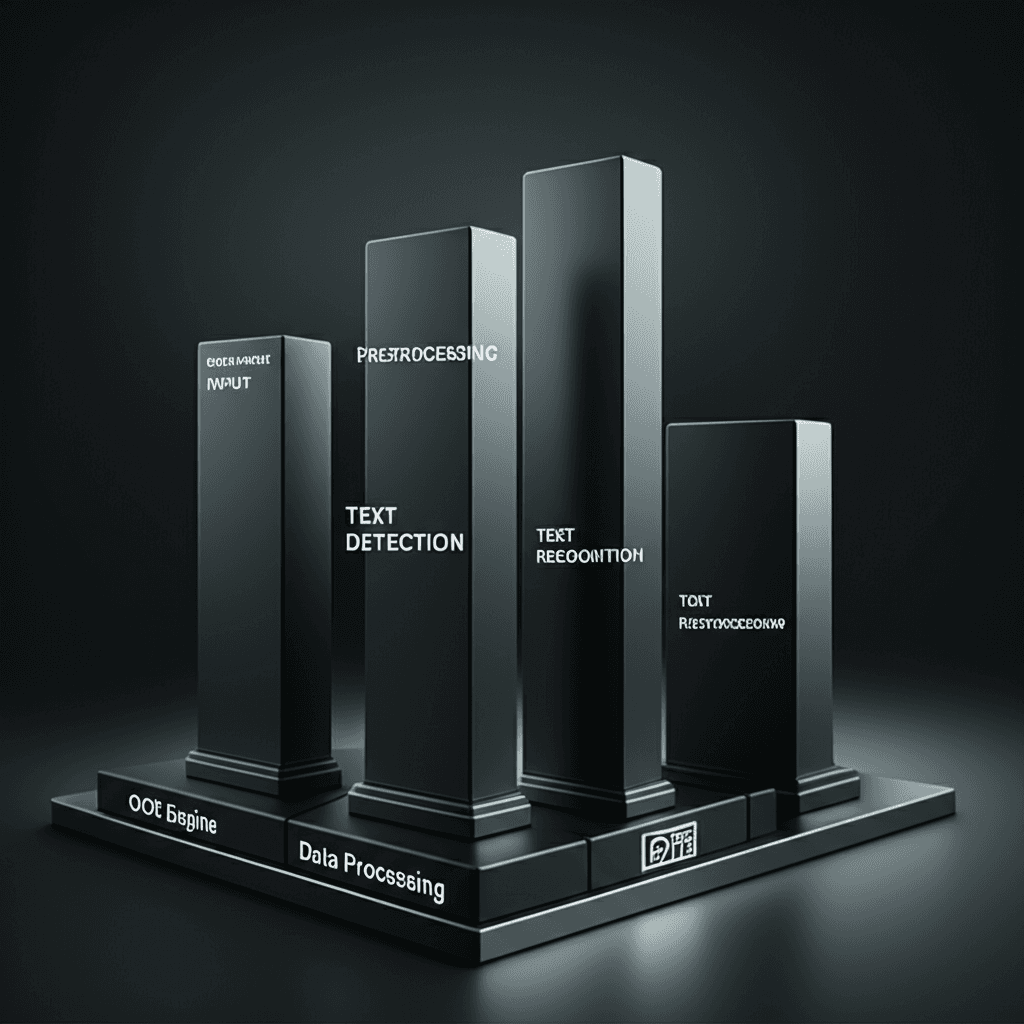

Generative AI Architecture

OCR/KYC & Enterprise Data

We utilize advanced OCR/KYC technology to securely extract key information from your documents, integrating it seamlessly into a structured database. By combining this extracted data with existing business information, we enrich your enterprise dataset. This comprehensive data fuels a custom-trained generative AI model designed specifically to enhance your business analytics and drive tangible value.
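The extract, store, and enrich flow described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the production pipeline: it assumes an OCR engine has already turned the document into plain "Label: value" text, and the field names, regex patterns, the `kyc` table, and the pre-existing `accounts` table with its columns are all hypothetical.

```python
import re
import sqlite3
from dataclasses import dataclass, astuple
from typing import Optional

# Hypothetical patterns: assumes the OCR step emits plain "Label: value" lines.
FIELD_PATTERNS = {
    "customer_id": re.compile(r"Customer ID:\s*(\S+)"),
    "full_name": re.compile(r"Name:\s*(.+)"),
    "date_of_birth": re.compile(r"Date of Birth:\s*(\d{4}-\d{2}-\d{2})"),
    "document_number": re.compile(r"Document No:\s*(\S+)"),
}


@dataclass
class KycRecord:
    customer_id: str
    full_name: str
    date_of_birth: str
    document_number: str


def extract_kyc_fields(ocr_text: str) -> Optional[KycRecord]:
    """Parse the known KYC fields from OCR text; return None if any field is missing."""
    values = {}
    for name, pattern in FIELD_PATTERNS.items():
        match = pattern.search(ocr_text)
        if match is None:
            return None
        values[name] = match.group(1).strip()
    return KycRecord(**values)


def store_and_enrich(conn: sqlite3.Connection, records: list[KycRecord]) -> list[tuple]:
    """Load KYC records into a structured table and join them with existing business data.

    Assumes a hypothetical pre-existing `accounts(customer_id, segment, lifetime_value)` table.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS kyc ("
        "customer_id TEXT PRIMARY KEY, full_name TEXT, "
        "date_of_birth TEXT, document_number TEXT)"
    )
    # astuple() yields fields in declaration order, matching the column order above.
    conn.executemany(
        "INSERT OR REPLACE INTO kyc VALUES (?, ?, ?, ?)",
        [astuple(r) for r in records],
    )
    # Enrichment step: combine extracted identity data with existing account data.
    return conn.execute(
        "SELECT a.customer_id, k.full_name, k.date_of_birth, a.segment, a.lifetime_value "
        "FROM accounts AS a JOIN kyc AS k ON k.customer_id = a.customer_id "
        "ORDER BY a.customer_id"
    ).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE accounts (customer_id TEXT PRIMARY KEY, segment TEXT, lifetime_value REAL)")
    conn.execute("INSERT INTO accounts VALUES ('C-001', 'SMB', 1200.0)")
    text = "Customer ID: C-001\nName: Jane Doe\nDate of Birth: 1990-04-12\nDocument No: X1234567\n"
    record = extract_kyc_fields(text)
    print(store_and_enrich(conn, [record]))
```

Returning `None` when a field is missing keeps incomplete extractions out of the structured table; a real system would more likely route such documents to manual review. The enriched rows are the kind of combined dataset that could then feed model training or analytics.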

Advanced Technology

AI-Powered Solutions for Modern Businesses

Our cutting-edge AI solutions help businesses automate processes, gain valuable insights, and stay ahead of the competition. By leveraging machine learning and data analytics, we create customized solutions to address your specific challenges.

- Intelligent automation of repetitive tasks

- Predictive analytics for data-driven decisions

- Natural language processing capabilities

- Seamless integration with existing systems

Intelligent Model Routing & Selection Solution

Generative AI Assistant Proxy

Your single gateway to the world's best generative AI models. Simplify integration, optimize costs, and route requests intelligently.

- Intelligent Model Routing & Selection

- Cost Tracking & Usage Monitoring

- Generative AI Assistant with limitless context

- Multi-Threaded Chatbot

- Personalization Features
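The routing and cost-tracking features listed above can be illustrated with a small sketch. The proxy's actual routing logic is not described here, so this assumes a simple "cheapest model that is capable enough" policy; the model names, capability tiers, prices, and the keyword heuristic in `required_tier` are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ModelSpec:
    name: str
    tier: int                  # hypothetical capability tier; higher is more capable
    cost_per_1k_tokens: float  # hypothetical price in USD per 1,000 tokens


# Hypothetical catalog; real entries would come from provider configuration.
CATALOG = [
    ModelSpec("small-fast", tier=1, cost_per_1k_tokens=0.0005),
    ModelSpec("mid-general", tier=2, cost_per_1k_tokens=0.003),
    ModelSpec("large-reasoning", tier=3, cost_per_1k_tokens=0.015),
]


def required_tier(prompt: str) -> int:
    """Toy heuristic: coding/proof requests need tier 3, long prompts need tier 2."""
    text = prompt.lower()
    if "code" in text or "prove" in text:
        return 3
    if len(prompt.split()) > 50:
        return 2
    return 1


@dataclass
class Router:
    catalog: list[ModelSpec]
    spend: dict[str, float] = field(default_factory=dict)  # model name -> USD spent

    def route(self, prompt: str) -> ModelSpec:
        """Pick the cheapest model whose tier meets the prompt's requirement."""
        tier = required_tier(prompt)
        candidates = [m for m in self.catalog if m.tier >= tier]
        if not candidates:
            raise ValueError(f"no model in catalog meets tier {tier}")
        return min(candidates, key=lambda m: m.cost_per_1k_tokens)

    def record_usage(self, model: ModelSpec, tokens: int) -> float:
        """Add the cost of `tokens` on `model` to the running spend and return that cost."""
        cost = tokens / 1000 * model.cost_per_1k_tokens
        self.spend[model.name] = self.spend.get(model.name, 0.0) + cost
        return cost
```

For example, `Router(CATALOG).route("Write code to parse a CSV file")` selects `large-reasoning`, while a short summarization prompt falls through to `small-fast`. Choosing the cheapest sufficient model is one way to "optimize costs" as described; a production router might replace the keyword heuristic with a trained classifier.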

Try our Generative AI Assistant Proxy

Select the perfect package for your needs, with flexible pricing options designed to scale with your projects.

Starter

Perfect for individuals and small projects.

- Premium model access

- Standard support

- Basic analytics

- Limited context memory

- Web search

Plan Includes:

- 150 Premium credits per month

- Rate limit: 20 req/2h

- 1,500 standard message credits per month

- Image generation: 50/month

Pro

Ideal for professionals and growing businesses.

- All model groups access

- Priority support

- Advanced analytics

- Token efficiency insights

- Full context memory

- Web search

- Knowledge access

- Custom model tuning

- Jupyter environment

Plan Includes:

- 400 Premium credits per month

- Rate limit: 60 req/2h

- Additional Premium credits can be purchased separately

- Unlimited standard message credits

- Image generation: 200/month

Enterprise

For organizations with advanced needs.

- All model groups access

- Dedicated support

- Custom rate limits

- Advanced analytics

- SLA guarantees

- Custom model tuning

- Fine-tuning available

- Full context memory

- Web search

- Knowledge access

- Jupyter environment

Plan Includes:

- Unlimited requests

- Image generation: 200/month

All plans include a 7-day free trial. No credit card required.

Need a custom solution? Contact our sales team.
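The per-plan rate limits listed above (20 requests per 2 hours on Starter, 60 on Pro) could be enforced with a sliding-window limiter. How the service actually enforces its limits, and whether its window is rolling or fixed, is not stated, so this is a hedged sketch: the sliding-window-log technique, the `PLAN_LIMITS` mapping, and the class name are assumptions for illustration. Enterprise plans use custom limits and would simply pass their own value.

```python
import time
from collections import deque
from typing import Optional

# Requests allowed per rolling two-hour window, taken from the plan listings above.
PLAN_LIMITS = {"starter": 20, "pro": 60}
WINDOW_SECONDS = 2 * 60 * 60


class SlidingWindowLimiter:
    """Allow at most `limit` requests within any rolling `window` seconds."""

    def __init__(self, limit: int, window: float = WINDOW_SECONDS):
        self.limit = limit
        self.window = window
        self._timestamps: deque[float] = deque()  # times of accepted requests, oldest first

    def allow(self, now: Optional[float] = None) -> bool:
        """Record and accept the request if under the limit; otherwise reject it."""
        now = time.monotonic() if now is None else now
        # Drop accepted requests that have fallen out of the rolling window.
        while self._timestamps and now - self._timestamps[0] >= self.window:
            self._timestamps.popleft()
        if len(self._timestamps) < self.limit:
            self._timestamps.append(now)
            return True
        return False
```

With `SlidingWindowLimiter(PLAN_LIMITS["starter"])`, twenty requests in quick succession are accepted and the twenty-first is rejected until the oldest request is two hours old. Storing one timestamp per accepted request is exact but uses memory proportional to the limit, which is negligible at these request counts.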

What Our Clients Say

Discover how we've helped businesses elevate their digital presence with our modern design solutions.

“One of the most useful AI chatbots I have used. I have been using it for about a year, and in my experience it gives me far more than the big companies' chatbots do. Its strength is answering our questions the way we expect them to be answered. The Kiri2.0 model is also strong at coding, analysis, and general knowledge.”

“Chat.Blizzer has transformed my daily workflow. I can jump between all the best AI models while being in one place. Honestly, I was skeptical at first, but now I catch myself recommending it to colleagues almost daily.”